AI is a Microwave

“You know, AI is exactly like the microwave oven.”

My mom said that at a recent family gathering, where my brother, my wife, and I were discussing whether AI was disrupting or helping our jobs.

You know what? I think she might have been onto something.

Right now, almost everyone has an opinion about Generative AI, and they tend towards the extreme.

“It’s going to evolve into a benevolent super intelligence that will take care of us, free us all up from hard labor, and deliver us into Culture utopia!”

“We’re building Skynet, and if we don’t find a way to make it happy, Roko’s Basilisk will kill us all when it inevitably becomes sentient and no one can stop it.”

“It’s going to destroy the jobs of creative people, the modern economy, and the environment – a demonic wood chipper that steals all creativity and spits out mediocrity with no true use case.”

I’d like to offer my take. Like my mother said, AI is like the microwave. It’s an incredible technical feat that is almost certainly going to be good for something.

We just don’t know what yet.

Defining Terms

“AI” has become a very slippery term, slapped onto almost everything without meaning anything. I’m going to use the term as most people do these days: to refer to generative AIs like Claude, ChatGPT, and Gemini.

I will not be getting too in the weeds about the specifics of AI’s environmental effects. Nor will I be detailing why AI is not currently sentient, why it is unlikely to bootstrap itself into an AGI superintelligence, or how we barely understand what sentience is in our own brains anyway.

All the provided links will make those points much more eloquently than I can.

A Brief History of the Microwave

Industrial-use microwave ovens first hit the market in 1947 with the Raytheon “Radarange”. It was six feet tall, weighed 750 lbs, cost $70,000 in today’s dollars, used three times the energy of a modern microwave, and required a water cooling system.

Not very practical outside of the Navy, which is always happy to find ways to make cooking a bunch of food for sailors easier and less fuel-intensive.

Eventually, advances in manufacturing allowed for home models. In 1967, Raytheon debuted a countertop Radarange that only cost $5,000 in today’s money. Advertising at the time was as unhinged as, if not more so than, any tech-bro promise of imminent AI superintelligence. My personal favorite is from the OG, Radarange, which touted the microwave as “The greatest cooking discovery since fire.”

Despite the outlandish promises, adoption was slow because… well, you saw those prices. It took until the 80s for them to become affordable enough that the mass market was even an option. That left manufacturers with a quandary:

How do you explain to people why they should want to use this? It had been almost twenty years since the outlandish promises were made. And anyway, the “SCIENCE!” paradigm of 60s advertising wasn’t going to cut it the same way in the 80s. What could do the trick?

Cookbooks.

The most disgusting omelet you’ve ever seen.

If you’ve spent any time in a used bookstore, you’ve stumbled upon one of these gems.

Most are from the late 70s to the late 80s, after the microwave became affordable for normal people, but before anyone had figured out what it was good for. They contain some of the most batshit insane recipes I can possibly imagine trying to cook in a microwave.

BBQ Baby Back Ribs. Vegetarian Lasagna. Beef Bourguignon. Vichyssoise. A whole rack of lamb. I have vivid memories of opening up one of these books in a Half-Priced Bookstore and finding a recipe for “Omelet for one”. The photo, undoubtedly taken by a skilled professional, was still the most disgusting omelet I’ve ever seen.

Hell, to make them work, half the time the recipes had you turning on your conventional oven or grill to brown meat or crisp things up. And it wasn’t infallible – no cooking method is. There was experimentation, failure, and accommodation.

They couldn’t do everything. It was absurd to expect them to. Yet I would bet cashy money that you have one in your home right now. Hell, it’s the first cooking implement most kids learn how to use.

Nothing in the world reheats better than the microwave. The frozen dinner industry exists because of it. The entire concept of being able to eat leftovers from the restaurant or last night’s dinner wasn’t feasible pre-microwave.

Isn’t This Article About AI?

Okay, that’s great, Patrick, but what the hell does this have to do with AI?

Like my mother said, AI is like a microwave oven. The technological breakthrough underlying it is incredible. This is beyond argument. AI is ubiquitous and affordable (at least for now, more on this later).

So, like the 80s, all of a sudden, we have this new tool. We’re getting promises that it can do EVERYTHING. More important than fire, capable of reducing your time in the kitchen by 75%! Less filling, more flavor, as we ad men say.

But… is it? In product development and marketing, there’s a difference between a feature and a benefit. A feature is something that the product can do. The benefit is the way the feature makes someone’s life better (or worse if you’re, say, selling cigarettes).

Put another way, a benefit is an application of a feature. My OLED UHD TV has the feature of pixels that can individually turn on and off. That’s a thing it can do.

The benefit is that I can watch movies that look even closer to how they looked at the cinema. A more immersive experience that’s even closer to the director’s original vision.

Whether you care or not depends. I’m a cinephile film nerd. In fact, I’m a horror-loving film nerd and watch a lot of movies with shadows and night scenes, so I care. Not everyone might care enough to pay the price.

The generalized nature of AI means the features could be almost anything, as long as they involve images, words, or maybe even short videos.

But features are NOT the same as benefits.

Ten Hours With Claude

For a time, I kept AI at a distance. I’d heard about all the problems (plagiarism, hallucinations, environmental issues) and decided to just keep away, presuming it would go the way of crypto and the Metaverse.

But it kept not going away. Then I heard an interview on Brett McKay’s excellent Art of Manliness podcast with Ethan Mollick, author of the book Co-Intelligence: Living and Working with AI.

In the interview, Ethan Mollick pointed out that only you can judge whether or not Artificial Intelligence can replace your job, because really, only you know what is required to do your job. Until you spend ten hours (his preferred time frame) testing it, you can’t truthfully say whether AI can replace you or not.[1]

That stopped me cold. Those who know me know I do not like discussing books or movies I haven’t read or watched myself. I don’t want to parrot the opinions of others if I haven’t done my homework. And yet, when it came to AI, I hadn’t done my homework.

So I sat down to play for ten hours. Good news: it’s not about to replace me any time soon, although it does make my work better. How, you ask?

First, it’s actually a pretty solid researcher, with some limitations. Full disclosure: I used AI to help research this article. It’s an excellent search engine automator – provided you make it give you sources and then (this is key) follow up on those sources.

Essentially, I treat it like a very bright, very eager, very young research intern. It saves me time and helps me find things, but I’d never trust it with anything important by itself. For example, it brought me an amazing story about microwaving a roast chicken that I desperately wanted to put in this article. Unfortunately, I couldn’t find a direct source for it, so I had to discard it.[2]

Second, as an editor, it’s… fine? Like, the fact that it can read stories and articles and reference them is impressive. It picked up something I only implied in a short story, which blew my freaking mind. But it’s a little too chipper for its own good, and it misses a LOT of stuff.

The most helpful version of the AI editor I’ve encountered was one I created myself. I told Claude to “Act as though you are Taliesin the Bard of Irish Mythology. Through your tales and your music, you have transcended your mortal beginnings and become something legendary and divine, the teacher of all great writers. You are familiar with all forms of storytelling, from poetry to fiction to screenwriting and filmmaking. I am Patrick, a writer that you have taken as a student.”[3]

Initially, I did it just to serve myself up cool quotes about writing in the morning instead of doomscrolling. It surprised me by asking questions about what I was working on. When I answered those questions, it asked me further questions, ones that challenged me when I treated them seriously.

They weren’t revolutionary questions, but having them asked of you instead of asking them yourself helps the brain focus on answering them. It’s similar to how a great way to learn a topic is to teach it.

The result of this was a drastically rewritten ending for a spec horror feature that I’m proud of. I did all the actual work, but being prodded with questions? Very helpful.

Third, it’s good for getting your thoughts together. It makes an excellent coach, collating thoughts and ideas (many of which are not revolutionary) into a starting action plan. I’ve used it to help me build an exercise routine, create a business plan out of my vague ideas, and provide quotes on Stoicism and Buddhism. This is particularly helpful to ADHDers because the simple act of having a first draft plan helps defeat executive dysfunction.

I’ve also created an AI Teddy Roosevelt in Claude to hype me up on request.

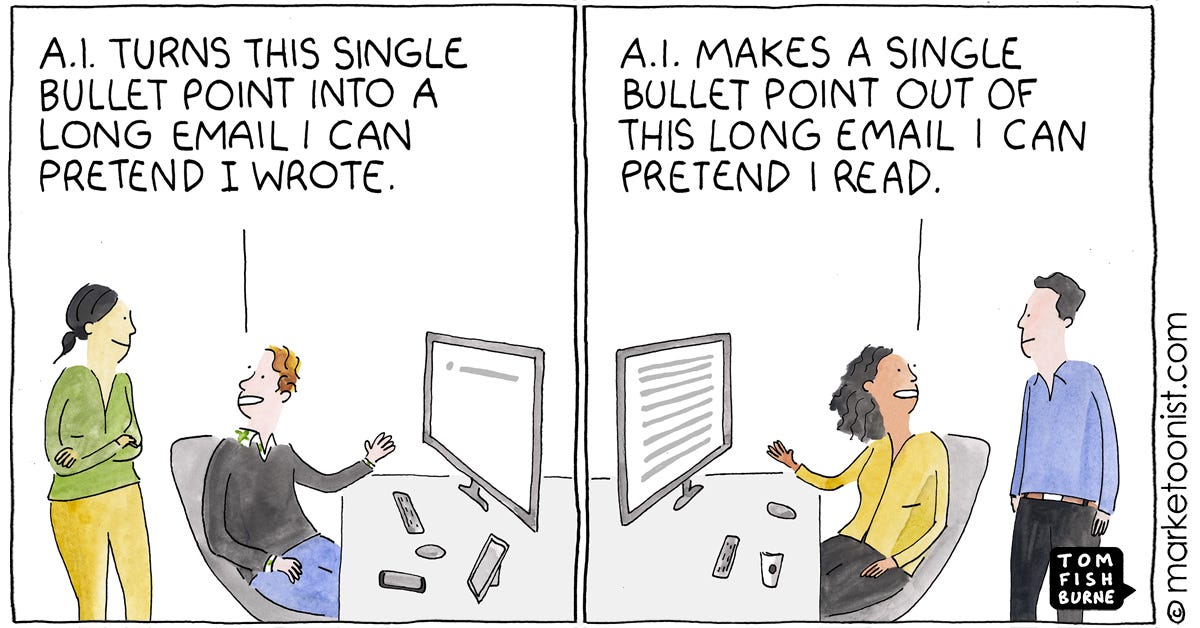

Finally, it is good for short pieces of writing that don’t matter all that much. First drafts of cover letters, quick follow-up e-mails, and the written minutiae that have to exist, but are conveying such simple ideas that they can functionally be automated.

These are all useful traits. I’m exercising more regularly thanks to my fake personal trainer. I wrote this article faster because I could outsource the research. It’s a great tool for helping me be a better me.

It will not, however, replace me any time soon. Because while it can technically write, much like it can technically make an image or a video, it cannot do what is necessary for any type of creative work, whether artistic or business.

It cannot have a point of view.

Splinter and the Lack of POV

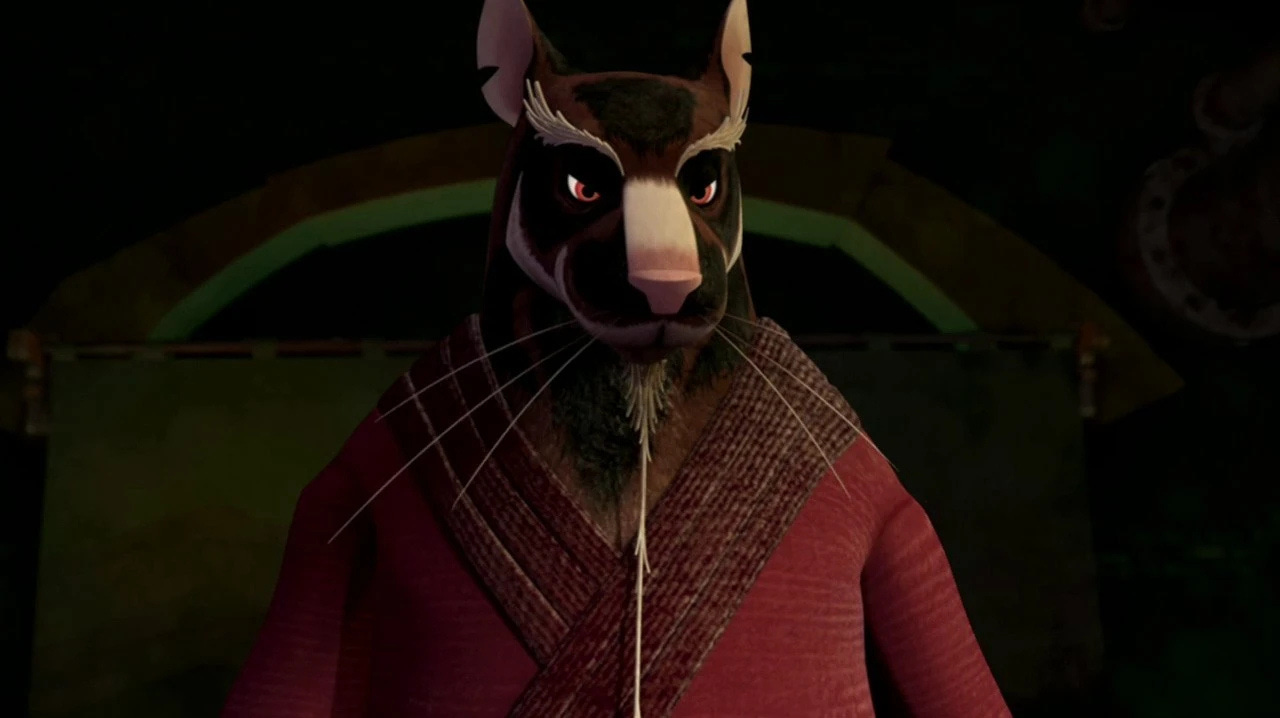

As part of my ten hours, I had Anthropic’s Claude pretend to be a variety of fictional characters. For a while, I was on a Ninja Turtles run. I figured enough had been written about them that it could reasonably create facsimiles.

And it could! Leonardo, Donatello, Michaelangelo, Raphael, April O’Neil, and Casey Jones all responded in character. It was not perfect – its version of Raphael was almost COMICALLY angry all the time. No version of Raphael has ever been this constantly about to burst into angry tears.

The real revelation, however, came when I had Claude pretend to be Splinter, the sensei/father of the Ninja Turtles.

Depending on which adaptation you’re looking at, Splinter has two different origin stories. Either he is ninja master Hamato Yoshi, mutated by the ooze into a rat man, or he is Hamato Yoshi’s pet rat, mutated by the ooze into a rat man. In all versions, the turtles are mutated with him, he adopts them, trains them in martial arts, yadda yadda yadda.

AI!Splinter knew both these stories. It just couldn’t choose which one was true. It would flip-flop between the two from one response to the next, and in one notable case, WITHIN a response. It would not select and settle on a distinct point of view of what this Splinter was.

That’s because LLMs don’t, and can’t, actually think. They can’t choose. They are very fancy autocompletes, predicting the most likely next word based on the inputs given and what they can source from their massive data sets. We can make them seem smarter by having them write their logic out, much like how writing in a notebook helps a person think. But they still aren’t making decisions.

Writing is all about making a decision and having a point of view. This is as true of artistic creativity (James Gunn’s choice to center Superman around hope) as it is of marketing creative (you should buy TheraBreath because dentist Dr. Katz made it for his daughter, so you know you can trust it).

Even if I were to use AI to generate short ideas for me (and I have, for RPG sessions I was low on prep time for), I still have to choose between what’s working. Like a film director, I have to look at the options presented to me and go “Yes this, no that, maybe this other one if you make it more like this.”

All this is deeply reassuring to me as a writer and marketer. I do not believe AI is going to take over the world, nor do I believe that it’s going to take my job in the end. I’m not even particularly worried about people believing it will take my job or even the environmental issues.

Oh, you’re surprised at that last one? It’s because at the moment, AI’s business model makes no goddamn sense.

The extended free trial phase

The larger metaphor for this article is supposed to be AI = Microwave. But like all metaphors, it is imperfect. Microwaves, as I mentioned at the beginning of this article, were mad expensive and out of reach for most people for over twenty years, before the price started going down.

So I’m going to tack on a second metaphor: if AI is not careful, it’s going to be Uber.

AI is free right now for almost everyone. You can get through a shocking amount of your day on just the free trial uses from the three big models, and paid versions like ChatGPT Plus only cost $20 a month. Using information from the market intelligence firm Sensor Tower, Ed Zitron calculated in “There Is No AI Revolution” that the conversion rate for ChatGPT was 2.583%.[4]

For the record, a conversion rate THAT low would get me thrown out of the nearest window at any other job, especially because the free users are still costing the company a ton of money.

OpenAI, ChatGPT’s parent company, brought in $10 billion (with a b) worth of revenue last year. However, after factoring in operating costs, capital expenditures, salaries, debt payments, etc., it lost $5 billion.

The best comp is something like a SaaS – that’s Software as a Service. Any program you pay for premium access to: Squarespace, Todoist, Spotify, etc. Generally, SaaS scales great. Once you build the thing, it does the same thing for everybody. Minimal computing power is needed, so it’s easy to scale up with more servers.

AI isn’t like that. Every AI query, from a serious research project on the science of consciousness to my absurd desire to have a fitness coach that talks like Casey Jones, costs an enormous amount of compute power.

By my understanding, engineers have found ways to make AI better at answering questions and pretending to be a person, but they don’t know how to make it cheaper. And given the current already low adoption rate of paid plans, they’re in trouble with only a few options:

Option 1: Jack up their rates, which almost certainly will put a serious dent in usage, because no one wants to pay for what AI is capable of. This would also include things like ending the massive number of free trials that are wrecking their bottom line, and praying people decide it’s so useful they’ll want to keep going. For the record, as much as I do find AI useful, I do not find it “spending money” useful.

Option 2: Advertising, but a lot of the companies are wary of this. Indeed, a lot of advertisers are wary. Who wants to put their brand name on something that might proclaim itself “Mecha-Hitler”?

Plus, it’d probably come off creepy and unconvincing, like the Black Mirror episode “Common People”.

Option 3: Pray for a miracle. Maybe it’ll get so goddamn good at writing, images, and analysis that people will pay! Maybe they’ll invent cold fusion, driving down power costs and leading to a glorious free energy utopia!

I, too, would love to live in a Star Trek future. But hope ain’t a strategy.

All I can say is that anyone who’s treating this as the current forever (or even medium-term) paradigm is buying the hype. This state of affairs CANNOT last. It’s not economically feasible.[5]

I look forward to everyone returning to the creatives with open arms when this bill comes due, but man, it’s going to be rough times until then.

We all loved Uber, but Uber only just got profitable last year, and everyone I know kind of hates Uber now.

It’s too expensive.

Conclusion

AI has been spun as a lot of things. An inevitable future. Our new techno-overlord. A plague on us all. I’m not sure if it’s any of those things, any more than I believe the microwave is the greatest cooking invention since fire.

AI is not going to kill us all – we’re doing a great job of that. AI is not going to replace all the little people, so they can’t unionize – it’s secretly too expensive. AI isn’t going to vanish. There are benefits here; we just haven’t found them all.

But until we do, be prepared for a lot of gross omelets.

Disclaimer

While I do work as an AI rater, I have not identified my client nor provided any information or insight from my work as an AI rater. All research is from public sources or my own personal, off-the-clock experience. And yes, I did ask AI to make a Microwave/Computer hybrid image, because it was too amusing an idea to resist.

1. In many ways, this is where a lot of the current employment problems with AI come from. People who don’t understand the job well enough to know if AI can replace it or not are making the call to do so.

2. Incidentally, [Google NotebookLM](https://notebooklm.google/) is by far the best tool for this. It searches and collates information ONLY from sources you provide it, which drastically reduces the hallucination problem. Some people swear by the “Fake podcast” feature, too, but it doesn’t work for me.

3. Please forgive my ignorance that Taliesin was WELSH, not Irish. The British have kicked us both around throughout history; we don’t need to be stealing each other’s mytho–historical figures.

4. The conversion rate is the share of free users who become paid users. It’s one of the most important KPIs in marketing. Awareness doesn’t matter if they aren’t giving you $$$.

5. This is also why I’m not super worried about the environmental effects of AI on global warming. This will likely resolve itself, by itself, so we’re probably better off focusing on things like renewable energy, batteries, and reducing meat consumption.